Update (3/17/21): Please note that the Camera.AddCommandBuffer API does not work with Unity’s Scriptable Render Pipelines (SRP). A somewhat analogous approach in URP is Renderer Features.

CommandBuffers have been around for a while now, since Unity 5.0, anyway. But despite their age, they seem to lack real coverage in the development community. That is unfortunate, because they are extremely useful and not all that hard to understand. They are also one of my favourite features of the engine.

So what are CommandBuffers, exactly? Well, it’s right there in the name. They are a list of rendering commands that you build up for later use: a buffer of commands. CommandBuffers operate under the concept of delayed execution, so when you call CommandBuffer.DrawRenderer, you aren’t actually drawing anything in that instant, but rather adding that command to a buffer to be executed later.
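To make the delayed execution concrete, here is a minimal sketch: the commands are recorded once in OnEnable, but nothing touches the GPU until Graphics.ExecuteCommandBuffer is called. The class name, the `target` field (assumed to be a RenderTexture assigned in the Inspector) and the Space-key trigger are hypothetical, chosen only for illustration.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical example: record now, execute later.
public class ManualCommandBufferExample : MonoBehaviour
{
    public RenderTexture target;   // assumed to be assigned in the Inspector
    CommandBuffer commandBuffer;

    void OnEnable()
    {
        commandBuffer = new CommandBuffer { name = "Manual Clear" };
        // These calls only add commands to the buffer; nothing is drawn yet.
        commandBuffer.SetRenderTarget(target);
        commandBuffer.ClearRenderTarget(true, true, Color.blue, 1f);
    }

    void Update()
    {
        // The recorded commands run only when we explicitly execute them.
        if (Input.GetKeyDown(KeyCode.Space))
            Graphics.ExecuteCommandBuffer(commandBuffer);
    }

    void OnDisable()
    {
        commandBuffer.Release();
    }
}
```

The same buffer can be executed as many times as you like without re-recording it.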

CommandBuffers can be used in a few different ways: attached to a Light or Camera at special events, or executed manually via the Graphics API. Each way serves a different purpose. For instance, if you only need to update a render target occasionally, perhaps based on an event or user input, you could execute it manually through Graphics.ExecuteCommandBuffer. If instead you need to update a render target every frame before any Transparent geometry is drawn, you could attach the CommandBuffer to a Camera at CameraEvent.AfterForwardOpaque. If you are doing something like writing information into a shadow map, you’ll likely be attaching it to a Light via a LightEvent.
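Attaching to a Light works much like attaching to a Camera, just with a LightEvent instead of a CameraEvent. The sketch below shows only the attach/detach mechanics; rather than writing into the shadow map, it uses a commonly seen pattern of exposing the just-rendered shadow map as a global texture at LightEvent.AfterShadowMap. The class name and the `_MyShadowMapCopy` property name are hypothetical.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical example: a CommandBuffer attached to a Light.
[RequireComponent(typeof(Light))]
public class LightCommandBufferExample : MonoBehaviour
{
    const LightEvent lightEvent = LightEvent.AfterShadowMap;
    CommandBuffer commandBuffer;

    void OnEnable()
    {
        commandBuffer = new CommandBuffer { name = "After Shadow Map" };
        // Right after the shadow map is rendered, it is the active target;
        // bind it to a global shader property so other shaders can sample it.
        commandBuffer.SetGlobalTexture("_MyShadowMapCopy", BuiltinRenderTextureType.CurrentActive);
        GetComponent<Light>().AddCommandBuffer(lightEvent, commandBuffer);
    }

    void OnDisable()
    {
        GetComponent<Light>().RemoveCommandBuffer(lightEvent, commandBuffer);
        commandBuffer.Release();
    }
}
```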

For convenience, I’ll be posting the complete code to the GitHub Project as I work through these tutorials. I’m currently using Unity 2018.1b4, the latest at the time that I started writing this.

Basic Example of a CommandBuffer

In order to do much of anything with a CommandBuffer, you’ll likely need a RenderTexture to work with. Now, you don’t actually use the RenderTexture directly here, but rather a RenderTargetIdentifier serving as a handle to it. If you know which RenderTexture you’ll be working with, you can create one simply by passing that RenderTexture to the RenderTargetIdentifier constructor. If you don’t, probably because you’re doing something with a temporary RenderTexture, you can use a string property name (read: a Shader Property such as ‘_MainTex’) or an integer ID from Shader.PropertyToID.
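The three ways of getting a RenderTargetIdentifier can be sketched side by side as follows. The class name, the `_MyTempRT` property name and the 256x256 size are hypothetical; the temporary texture is allocated with GetTemporaryRT so that the name- and ID-based identifiers actually refer to something.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical example of the three RenderTargetIdentifier constructors.
public class RenderTargetIdentifierExample : MonoBehaviour
{
    RenderTexture renderTexture;
    CommandBuffer commandBuffer;

    void OnEnable()
    {
        // 1. From a RenderTexture you already own.
        renderTexture = new RenderTexture(256, 256, 0);
        RenderTargetIdentifier fromTexture = new RenderTargetIdentifier(renderTexture);

        // 2. From a shader property name, typical for temporary textures.
        RenderTargetIdentifier fromName = new RenderTargetIdentifier("_MyTempRT");

        // 3. From an integer ID; worth caching instead of looking it up every frame.
        int tempID = Shader.PropertyToID("_MyTempRT");
        RenderTargetIdentifier fromID = new RenderTargetIdentifier(tempID);

        commandBuffer = new CommandBuffer { name = "RT Identifier Example" };
        // Allocate the temporary texture that fromName and fromID refer to.
        commandBuffer.GetTemporaryRT(tempID, 256, 256, 0);
        commandBuffer.SetRenderTarget(fromID);
        commandBuffer.ClearRenderTarget(true, true, Color.black, 1f);
        // Copy the temporary texture into the persistent one, then free it.
        commandBuffer.Blit(fromName, fromTexture);
        commandBuffer.ReleaseTemporaryRT(tempID);

        Graphics.ExecuteCommandBuffer(commandBuffer);
    }

    void OnDisable()
    {
        commandBuffer.Release();
        renderTexture.Release();
        Destroy(renderTexture);
    }
}
```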

So this is probably the most basic thing to do with a CommandBuffer:

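```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Attach to a GameObject with a Camera (Built-in Render Pipeline).
[RequireComponent(typeof(Camera))]
public class ClearToRed : MonoBehaviour
{
    void OnEnable()
    {
        CommandBuffer commandBuffer = new CommandBuffer();
        commandBuffer.name = "Clear to Red";
        commandBuffer.SetRenderTarget(BuiltinRenderTextureType.CameraTarget);
        commandBuffer.ClearRenderTarget(true, true, Color.red, 1f);
        GetComponent<Camera>().AddCommandBuffer(CameraEvent.AfterEverything, commandBuffer);
    }
}
```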

This simply clears the attached Camera’s target RenderTexture, which, in the case of our Main Camera, is the main frame buffer. Not very interesting, but that’s basically the minimum code needed to get a CommandBuffer working.

In this case, we aren’t manually executing the CommandBuffer, but rather adding it to a Camera to be executed at a particular point of its render loop.

To detach a CommandBuffer again, Camera has a RemoveCommandBuffer method, but you must maintain a reference to the CommandBuffer and remember which event it was attached at.

If you run this code and view the Camera in the Inspector, you’ll be able to see the attached CommandBuffer. The Inspector also reports the size of the CommandBuffer, which can be useful for optimizing.
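One way to handle the bookkeeping that RemoveCommandBuffer requires is to store both the CommandBuffer and its CameraEvent in fields and detach in OnDisable, so toggling the component doesn’t stack duplicate buffers on the Camera. This is a sketch, and the class name is hypothetical.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical example: same clear as above, but detached on disable.
[RequireComponent(typeof(Camera))]
public class ClearToRedWithCleanup : MonoBehaviour
{
    const CameraEvent cameraEvent = CameraEvent.AfterEverything;
    CommandBuffer commandBuffer;

    void OnEnable()
    {
        commandBuffer = new CommandBuffer { name = "Clear to Red" };
        commandBuffer.SetRenderTarget(BuiltinRenderTextureType.CameraTarget);
        commandBuffer.ClearRenderTarget(true, true, Color.red, 1f);
        GetComponent<Camera>().AddCommandBuffer(cameraEvent, commandBuffer);
    }

    void OnDisable()
    {
        // Must pass the same event and the same CommandBuffer instance.
        GetComponent<Camera>().RemoveCommandBuffer(cameraEvent, commandBuffer);
        commandBuffer.Release();
    }
}
```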

The Camera’s Inspector will report the attached CommandBuffers.

A More Practical Example

You can draw a Renderer, a Mesh or procedural geometry through a CommandBuffer. You may want to do this to draw to the active frame buffer, or perhaps to an off-screen buffer for use in a post-process effect. I tend to use the latter quite often, so I’ll focus on that.
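For the Mesh and procedural cases, a minimal sketch might look like the following. All field and class names are hypothetical, and `proceduralMaterial` is assumed to use a shader that generates its vertex positions from SV_VertexID, since DrawProcedural supplies no mesh data.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical example: DrawMesh and DrawProcedural through a CommandBuffer.
[RequireComponent(typeof(Camera))]
public class DrawMeshAndProceduralExample : MonoBehaviour
{
    public Mesh mesh;
    public Material meshMaterial;
    public Material proceduralMaterial;

    const CameraEvent cameraEvent = CameraEvent.AfterForwardOpaque;
    CommandBuffer commandBuffer;

    void OnEnable()
    {
        commandBuffer = new CommandBuffer { name = "Draw Mesh + Procedural" };
        // Mesh: submesh 0, shader pass 0, placed at the world origin.
        commandBuffer.DrawMesh(mesh, Matrix4x4.identity, meshMaterial, 0, 0);
        // Procedural: three vertices drawn as one triangle, no Mesh involved.
        commandBuffer.DrawProcedural(Matrix4x4.identity, proceduralMaterial, 0, MeshTopology.Triangles, 3);
        GetComponent<Camera>().AddCommandBuffer(cameraEvent, commandBuffer);
    }

    void OnDisable()
    {
        GetComponent<Camera>().RemoveCommandBuffer(cameraEvent, commandBuffer);
        commandBuffer.Release();
    }
}
```

Note that the matrices are baked in when the buffer is recorded; to follow a moving Transform you would clear and re-record the buffer each frame.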

As I mentioned before, you’ll need a RenderTexture and respective RenderTargetIdentifier to pass to the CommandBuffer. For simplicity’s sake, I won’t worry about many of the overloads.

```csharp
RenderTexture renderTexture = new RenderTexture(camera.pixelWidth, camera.pixelHeight, 0);
RenderTargetIdentifier rtID = new RenderTargetIdentifier(renderTexture);

CommandBuffer commandBuffer = new CommandBuffer();
commandBuffer.name = "Draw Renderer";
commandBuffer.SetRenderTarget(rtID);
commandBuffer.ClearRenderTarget(true, true, Color.clear, 1f);
commandBuffer.DrawRenderer(targetRenderer, targetRenderer.sharedMaterial);

// cameraEvent = CameraEvent.BeforeForwardOpaque
camera.AddCommandBuffer(cameraEvent, commandBuffer);
```

This assumes a Renderer called targetRenderer and its sharedMaterial. I’ve also elected to attach this CommandBuffer at CameraEvent.BeforeForwardOpaque. Out of the box, this won’t work with *every* Material, which I will explain later. For a test object, I’m just using a Sphere primitive with a Material using the Shader ‘Unlit/Color’.

Because we are writing to a RenderTexture rather than the main framebuffer, we won’t actually see any effect if we run this code, other than the CommandBuffer showing up in the Camera’s Inspector like before. We can debug this quickly by adding OnRenderImage to our script.

```csharp
void OnRenderImage(RenderTexture src, RenderTexture dest)
{
    Graphics.Blit(renderTexture, dest);
}
```

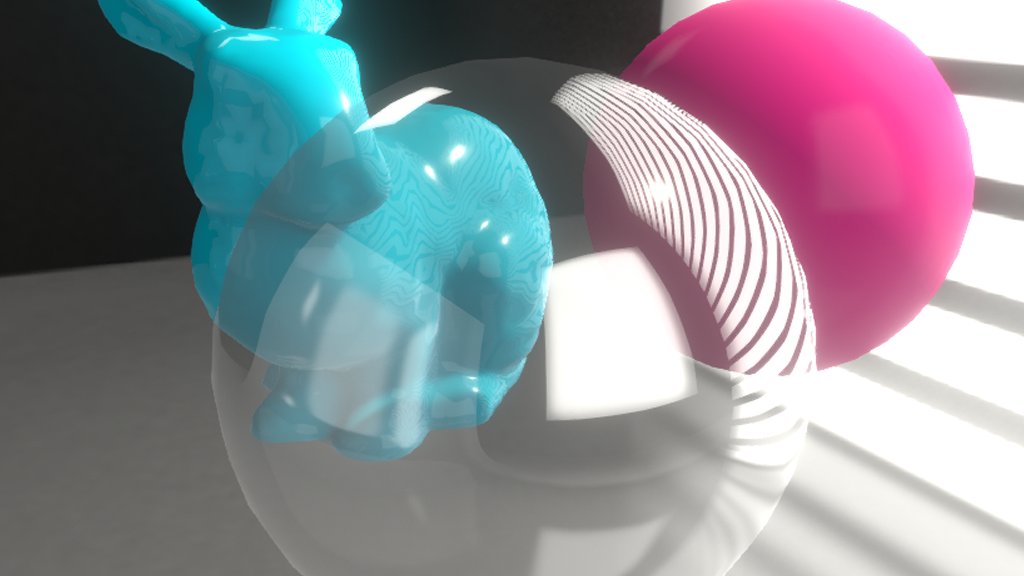

And the result is as expected. Since we’re rendering the Sphere with the same Material that we use to render it to the main framebuffer, and since the CommandBuffer is executed from within the Main Camera’s render loop, the Camera’s properties, such as its view and projection matrices, will still be applied. You can rely on this scaffolding when using a CommandBuffer in this way, and the behaviour is similar when writing one for Lights.
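Putting the pieces of this example together, including the cleanup the snippets above leave out, might look like the component below. It is a sketch assuming the Built-in Render Pipeline, a Camera on the same GameObject, and a `targetRenderer` assigned in the Inspector; the class name is hypothetical.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical example: draw one Renderer into an off-screen RenderTexture.
[RequireComponent(typeof(Camera))]
public class DrawRendererOffscreen : MonoBehaviour
{
    public Renderer targetRenderer;
    public CameraEvent cameraEvent = CameraEvent.BeforeForwardOpaque;

    Camera cam;
    CameraEvent attachedEvent; // remembered so removal uses the same event
    RenderTexture renderTexture;
    CommandBuffer commandBuffer;

    void OnEnable()
    {
        cam = GetComponent<Camera>();
        renderTexture = new RenderTexture(cam.pixelWidth, cam.pixelHeight, 0);

        commandBuffer = new CommandBuffer { name = "Draw Renderer" };
        commandBuffer.SetRenderTarget(new RenderTargetIdentifier(renderTexture));
        commandBuffer.ClearRenderTarget(true, true, Color.clear, 1f);
        commandBuffer.DrawRenderer(targetRenderer, targetRenderer.sharedMaterial);

        attachedEvent = cameraEvent;
        cam.AddCommandBuffer(attachedEvent, commandBuffer);
    }

    void OnDisable()
    {
        cam.RemoveCommandBuffer(attachedEvent, commandBuffer);
        commandBuffer.Release();
        renderTexture.Release();
        Destroy(renderTexture);
    }

    // Debug view: show the off-screen texture instead of the camera image.
    void OnRenderImage(RenderTexture src, RenderTexture dest)
    {
        Graphics.Blit(renderTexture, dest);
    }
}
```

The RenderTexture is sized once in OnEnable, so it won’t follow window resizes; disabling and re-enabling the component recreates it at the current size.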

Some Loose Ends

Now, as I mentioned before, at this point our CommandBuffer won’t work out of the box with every Shader. For instance, simply switch from ‘Unlit/Color’ to ‘Standard’ and you’ll see that it doesn’t look right. There are a couple of reasons for this.

First, I elected to use the simplest overload of CommandBuffer.DrawRenderer, which, in the case of the Standard Shader, doesn’t invoke the correct Shader Pass by default. Simply using a different overload and exposing a couple of values will get us part of the way there, but not all the way.

```csharp
commandBuffer.DrawRenderer(targetRenderer, targetRenderer.sharedMaterial, subMeshID, shaderPass);
```

In this case I’m just leaving both values at 0, but running this already produces a different result. It’s still wrong, though, because our lighting doesn’t appear to be coming from the correct direction.
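The subMeshID parameter starts to matter once a Renderer has more than one Material. A hedged sketch of a helper that issues one DrawRenderer call per submesh, pairing submesh i with sharedMaterials[i], is shown below; the helper name is hypothetical, and it assumes the Renderer has no more Materials than submeshes.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical helper: draw every submesh of a Renderer with its own Material.
public static class CommandBufferDrawUtil
{
    public static void DrawAllSubMeshes(CommandBuffer commandBuffer, Renderer targetRenderer, int shaderPass)
    {
        Material[] materials = targetRenderer.sharedMaterials;
        for (int subMeshID = 0; subMeshID < materials.Length; subMeshID++)
        {
            // Same shader pass for every submesh, e.g. 0 for the first pass.
            commandBuffer.DrawRenderer(targetRenderer, materials[subMeshID], subMeshID, shaderPass);
        }
    }
}
```

Usage would be `CommandBufferDrawUtil.DrawAllSubMeshes(commandBuffer, targetRenderer, 0);` in place of the single DrawRenderer call.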

As I mentioned above, we are assuming that the Camera’s scaffolding (read: constant buffers) is correctly populated. At our current point in the Camera’s loop, CameraEvent.BeforeForwardOpaque, these constant buffers are not correct, or rather, not what we expect them to be. If we change the event to something just a little later, like CameraEvent.AfterForwardOpaque, things will likely look a lot better.

For fun, if you switch the shaderPass from 0 to 1, you’ll see that it now ignores any GI lighting. That’s because we’ve switched from the Standard Shader’s ForwardBase Pass to its ForwardAdd Pass, which does not factor in GI since it only handles additive lighting.

Wrapping Up

At this point, we’ve created a CommandBuffer that can draw a specified Renderer and its Material to an offscreen RenderTexture. This is a good place to leave off for now.

Future posts covering CommandBuffers will appear here and on the GitHub Project.

Feedback would be greatly appreciated as I get into writing these.
